About

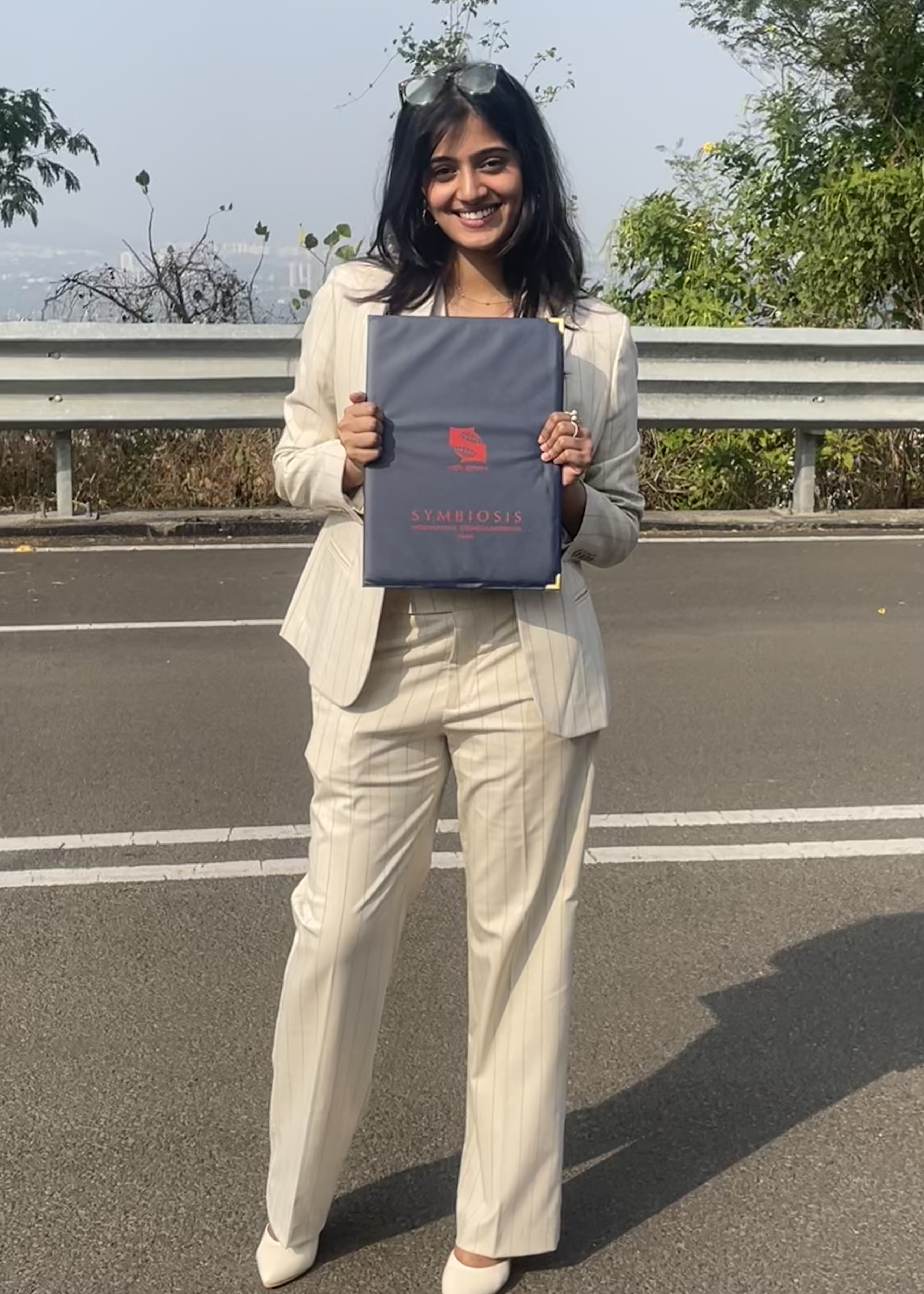

Nikita Chaudhari

(nih-kee-tah)

I'm studying AI at Carnegie Mellon SCS!

Nikita is a Master’s student in Artificial Intelligence at Carnegie Mellon University, where she has excelled in building robust machine learning models and developing scalable software solutions. Her strong foundation in software engineering and AI positions her to thrive in a summer internship.

Expertise:

Artificial Intelligence & Machine Learning, Software Engineering, Data Engineering, MLOps, Research

Skills

I can do:

1 - Languages

- Python

- Java

- C & C++

- SQL

- HTML, CSS & JavaScript

2 - Frameworks

- PyTorch

- Hugging Face

- Scikit-learn

- TensorFlow

- Keras

- FastAPI

- Tkinter

- Apache Spark

3 - Libraries

- Pandas

- NumPy & Matplotlib

- SciPy

- NLTK

- OpenCV

- Selenium, BeautifulSoup & Scrapy

- Flask & Django

4 - Tools

- Git

- GitHub & GitLab

- pip & conda

- Docker & Kubernetes

- VS Code

- Jupyter Notebook

- Jira

Coursework

Classes I've taken at Carnegie Mellon & Symbiosis

Academic Coursework

* CMU Grad Summer 2024

* SIU Undergrad

- 0302: Data Structures & Algorithms

- 0702: Compiler Construction

- 0505: Theory of Computation

- 0709: Neural Networks

- 0502: Computer Vision

- 0715: Natural Language Processing

- 0603: Design Thinking

- 0304: Computer Organization

- 0305: Digital Electronics & Logic Design

- 0403: Operating Systems

- 0504: Database Management Systems

- 0518: Introduction to Image Processing

- 0524, 0525, 0635: Machine Learning: Classification, Regression, Clustering & Retrieval

- 0614: Human Computer Interface

- 0608: Internet of Things

- 0703: Big Data, Hadoop & Apache Spark

- 0604: Cyber Security

* CMU Grad Fall 2024

Works

Published Work

Portfolio

Smarter Summaries: NLP for Research Papers

Developed a hybrid model combining abstractive and extractive summarization to distill insights from research paper introductions.

Attained a final weighted average score of 5.8, close to BERT’s 6.88 and ahead of BART (4.8) and T5 (5.4), as evaluated through ROUGE, BLEU, and author evaluation metrics.

Comparative Analysis Of Indian Sign Language Recognition Using Deep Learning Models

Built an Indian Sign Language (ISL) dataset and compared five deep learning models for ISL-to-text translation, using image processing techniques with DenseNet, Inception, ResNet, VGG19, and VGG16.

Achieved the best results with ResNet-50, attaining 98.25% accuracy and 99.34% F1-score; InceptionNet V3 performed worst, with 66.75% accuracy and 70.89% F1-score. Findings were published in the Forum for Linguistic Studies.

Improving the Performance of Autonomous Driving Through Deep Reinforcement Learning

Developed and trained an autonomous car navigation model using reinforcement learning algorithms, including PPO, DQN, and DDPG. Conducted a comprehensive analysis of algorithm performance based on speed, accuracy, and reliability.

Published findings in Sustainability, MDPI, showcasing DQN’s superior performance in short training durations and highlighting potential enhancements with E-decay techniques. Identified areas for further optimization of PPO under extended training conditions.

Projects

Portfolio

Phoneme Recognition with Neural Network

Developed a Multilayer Perceptron (MLP)-based speech recognition model to classify phoneme states from Mel spectrograms.

Implemented data preprocessing, context padding, and feature extraction to optimize performance on phoneme classification.

Fine-tuned model architectures and hyperparameters (e.g., activation functions, dropout, batch size) to maximize accuracy in a Kaggle-hosted competition.
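As a rough illustration of the context padding step mentioned above, here is a minimal sketch in plain Python. It assumes each frame is a list of spectrogram features and that each frame is fed to the MLP together with a fixed number of neighbors on either side, with zero frames at the edges. The function name `add_context` and the zero-padding choice are assumptions for illustration, not the project's actual code.

```python
from typing import List

Frame = List[float]


def add_context(frames: List[Frame], context: int) -> List[Frame]:
    """Return one flattened window of (2 * context + 1) frames per input frame.

    Hypothetical helper: edges are zero-padded so every frame gets a full window.
    """
    if context < 0:
        raise ValueError("context must be non-negative")
    if not frames:
        return []

    n_features = len(frames[0])
    zero_frame = [0.0] * n_features

    # Pad both ends so the first and last frames still have neighbors.
    padded = [zero_frame] * context + frames + [zero_frame] * context

    windows = []
    for i in range(len(frames)):
        window = padded[i : i + 2 * context + 1]
        # Flatten the window into a single input vector for the classifier.
        windows.append([value for frame in window for value in frame])
    return windows


if __name__ == "__main__":
    toy_frames = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    for row in add_context(toy_frames, context=1):
        print(row)
```

With a context of 1 and two features per frame, each output vector has six values: the previous frame, the current frame, and the next frame, in that order.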

Dynamic Memory Allocator in C

Designed a dynamic memory allocator (malloc) in C, incorporating key optimizations like block splitting, coalescing, mini-headers, footers, and free block lists. Reduced fragmentation by 40% and improved memory utilization by 25%.

Drift: Quantifying drift for predictive modeling in medical x-ray imaging - Asher Orion

Designed a drift detection pipeline for chest X-rays (CheXpert and NIH datasets), incorporating a RAG-inspired model to filter in-distribution images and a model to assess input suitability. Currently extending the project with Asher Orion to make it usable in industry settings.

Group Project as a part of Nucleate's BioHacks 2024

Mini-Llama: Lightweight NLP Model

Implemented core components of Llama2, an advanced open-source language model, to gain a deeper understanding of neural language modeling.

Conducted text generation and sentiment classification on the SST-5 and CFIMDB datasets using both zero-shot prompting and fine-tuning approaches.

Utilized pretrained weights from stories42M.pt to enable text completion and classification, optimizing the model’s performance without using transformer-based libraries.

ML-Powered Image Accessibility & Search

Enhanced a web application by integrating ML-powered alternative text generation and image search for improved accessibility and usability.

Implemented automatic alt-text generation for images using pretrained vision models, improving accessibility for visually impaired users.

Enabled image search via object detection, allowing users to find images based on detected objects, leveraging cloud-based or local ML models.